The central limit theorem (CLT) is a statistical concept that describes the behavior of the mean of a random sample drawn from any population with a finite variance. As the sample size grows, the distribution of the sample mean approaches a normal distribution, regardless of the shape of the original population distribution. In other words, for a large enough sample, the sample mean is approximately normally distributed even when the population itself is not. That distribution is centered on the population mean μ, and its standard deviation (the standard error) is σ/√n.

The central limit theorem rests on three main assumptions. Independence: the observations must be independent of each other. Randomness: the samples must be randomly selected. Sample size: the sample must be sufficiently large. A common rule of thumb is n > 30, although strongly skewed populations may need more.

The central limit theorem is important in statistical inference and hypothesis testing because it gives the approximate shape of the sampling distribution of the mean, even when the population distribution is unknown. For example, given the sample mean and the sample standard deviation, we can use the CLT to estimate the population mean and to quantify how uncertain that estimate is.

The relationship between the CLT and the law of large numbers (LLN) is essential to understanding how sample data can be used to make inferences about populations, especially when the population distribution is unknown. The law of large numbers states that the sample mean approaches the true population mean as the sample size increases. The CLT goes further by describing how the sample mean fluctuates around the population mean: those fluctuations are approximately normally distributed, and their spread shrinks in proportion to 1/√n, regardless of the underlying population distribution.

The CLT has many applications in statistical analysis and in the real world. One of the most common is estimating population parameters using sample statistics. For example, to estimate the average height of a population, we can take a random sample of individuals and use the central limit theorem to estimate the population mean, together with a measure of how precise that estimate is. Confidence intervals and the margin of error are also important applications of the central limit theorem.
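To see the CLT and the LLN at work, the sketch below repeatedly draws samples from a strongly skewed population and summarizes the resulting sample means. The exponential population with rate 1 (mean 1, standard deviation 1, skewness 2) is an assumption chosen only because it is clearly not normal; the sample sizes, repetition count, and seed are likewise illustrative. As n grows, the average of the sample means stays near 1 (LLN), their spread tracks σ/√n, and their skewness falls toward 0, the value for a normal distribution (CLT).

```python
import random
import statistics


def skewness(xs):
    """Moment-based sample skewness: the mean of the cubed z-scores."""
    m = statistics.fmean(xs)
    s = statistics.pstdev(xs, m)
    return statistics.fmean(((x - m) / s) ** 3 for x in xs)


def simulate_sample_means(n, reps=4000, seed=0):
    """Draw `reps` samples of size `n` from an Exp(1) population and summarize their means.

    Exp(1) is an assumed example population: mean 1, standard deviation 1,
    skewness 2, so it is far from normal.
    """
    rng = random.Random(seed)
    means = [statistics.fmean(rng.expovariate(1.0) for _ in range(n)) for _ in range(reps)]
    return statistics.fmean(means), statistics.stdev(means), skewness(means)


for n in (1, 5, 30, 200):
    avg, sd, skew = simulate_sample_means(n)
    # Theory for Exp(1): sd of the mean = 1/sqrt(n), skewness of the mean = 2/sqrt(n).
    print(f"n={n:3d}  mean of means={avg:.3f}  "
          f"sd={sd:.3f} (theory {1 / n ** 0.5:.3f})  "
          f"skewness={skew:.2f} (theory {2 / n ** 0.5:.2f})")
```

The simulation is used instead of a formula because the point of the CLT is that the shape of the sampling distribution changes with n; printing the spread and skewness side by side with their theoretical values makes that change visible.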
A confidence interval is a range of values that is likely to contain the population parameter of interest; a 95% confidence interval, for example, is built by a procedure that captures the true parameter in about 95% of repeated samples. The margin of error is the half-width of that interval, that is, how far the estimate may plausibly lie from the true parameter at the chosen confidence level. Both confidence intervals and the margin of error shrink as the sample size grows, and the CLT plays a crucial role in determining the appropriate sample size for accurate estimates.

Hypothesis testing is another area where the central limit theorem is widely used. Hypothesis testing involves testing a claim or hypothesis about a population parameter using sample data. For a mean, the test statistic is z = (x̄ − μ₀)/(s/√n), and the CLT justifies comparing it with a standard normal distribution to judge how likely such a sample mean would be if the null hypothesis were true. The p-value is the probability of observing a sample mean at least as extreme as the one obtained, assuming the null hypothesis is true. If the p-value is less than the chosen significance level, the null hypothesis is rejected in favor of the alternative hypothesis.

Real-world examples of problems that use the CLT include quality control in manufacturing, election polling, and medical research. In quality control, the CLT is used to determine whether a production process is operating within acceptable limits. In election polling, it is used to estimate the proportion of voters who support a particular candidate. In medical research, it is used to estimate a treatment's efficacy by comparing the outcomes of the treatment group with those of the control group.

While the central limit theorem is a powerful tool for statistical analysis, it does not apply in every situation. One of its main limitations is that it assumes the samples are drawn from a population with a finite variance; if the population variance is infinite, as with the Cauchy distribution, the classical CLT does not hold. Another limitation is that it assumes the observations are independent of each other. If they are not independent, the standard form of the CLT does not hold.
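The confidence interval, margin of error, and hypothesis test described above can be sketched with the large-sample (normal-approximation) formulas that the CLT justifies. This is a minimal sketch under stated assumptions: the function names are hypothetical, the data are invented purely to illustrate the arithmetic, and the normal approximation is only reasonable when n is large enough for the CLT to apply.

```python
import math
import statistics
from statistics import NormalDist


def mean_confidence_interval(data, confidence=0.95):
    """Large-sample confidence interval for a population mean.

    Uses x_bar +/- z * s / sqrt(n), where z comes from the standard normal
    distribution (about 1.96 for 95%). Returns (low, high, margin_of_error).
    """
    n = len(data)
    x_bar = statistics.fmean(data)
    se = statistics.stdev(data) / math.sqrt(n)      # estimated standard error
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    margin = z * se
    return x_bar - margin, x_bar + margin, margin


def z_test_mean(data, mu0):
    """Two-sided large-sample z-test of H0: population mean == mu0.

    Returns the z statistic and the p-value, i.e. the probability of a sample
    mean at least as extreme as the observed one if H0 is true.
    """
    n = len(data)
    se = statistics.stdev(data) / math.sqrt(n)
    z = (statistics.fmean(data) - mu0) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 100 values, used only to illustrate the calculation.
sample = [1, 2, 3, 4, 5] * 20
low, high, moe = mean_confidence_interval(sample)
print(f"95% CI: ({low:.3f}, {high:.3f}), margin of error = {moe:.3f}")

z, p = z_test_mean(sample, mu0=2.7)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

For small samples from a roughly normal population, a t-distribution critical value is normally used in place of the z value; the z version is shown here because it is the one that rests directly on the CLT.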
There are alternative approaches for dealing with non-normal distributions and with cases where the CLT's assumptions fail, such as non-parametric methods. Non-parametric methods make few or no assumptions about the shape of the population distribution and are, therefore, more robust than parametric methods in some situations.

Results built on the CLT also appear in more advanced areas such as Bayesian statistics and machine learning. Bayesian statistics is a method of statistical inference that uses probability distributions to represent uncertainty. Machine learning is a popular data analysis approach whose key feature is using algorithms to learn from data and make predictions.

The central limit theorem is a crucial concept in statistical analysis, enabling population parameters to be estimated from sample statistics with a quantifiable degree of precision. Its importance lies in its wide range of applications, including quality control, election polling, and medical research. However, the CLT has limitations and is not always applicable; in such situations, alternative approaches such as non-parametric methods may be used. Understanding the CLT is crucial for students, researchers, and professionals in fields that require statistical analysis.

What Is the Central Limit Theorem (CLT)?

Relationship Between CLT and the Law of Large Numbers

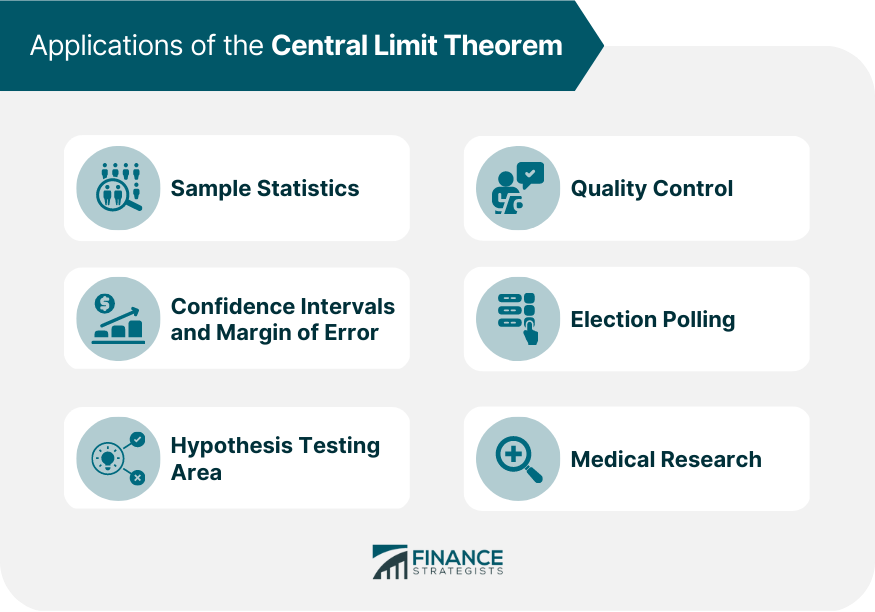

Applications of the Central Limit Theorem

Sample Statistics

Confidence Intervals and Margin of Error

Hypothesis Testing

Real-World Applications

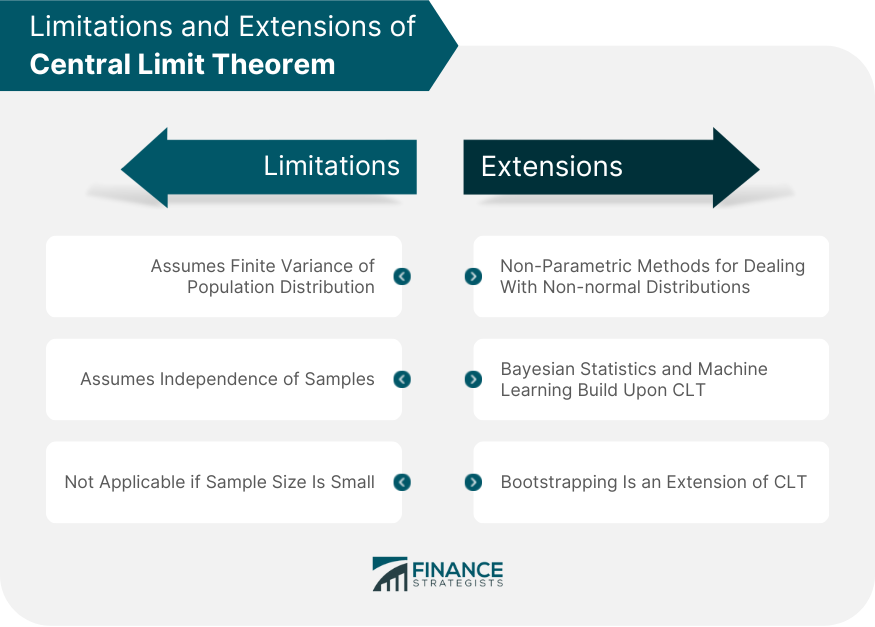

Limitations and Extensions of Central Limit Theorem

Conclusion

Central Limit Theorem (CLT) FAQs

The central limit theorem is a statistical concept that describes the behavior of the mean of a random sample drawn from any population with a finite variance. It states that as the sample size increases, the distribution of the sample mean approaches a normal distribution, regardless of the shape of the original population distribution.

The central limit theorem is based on three main assumptions: independence, randomness, and sample size. The samples must be independent of each other and randomly selected, and the sample size must be sufficiently large. A common rule of thumb is n > 30, but more skewed populations need larger samples.
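Why n > 30 is only a rule of thumb can be shown with a short calculation. For independent draws from a population with a finite third moment, the skewness of the sample mean equals the population skewness γ divided by √n, so how quickly the mean "looks normal" depends on how skewed the population is. The example populations and the 0.25 skewness threshold below are assumptions chosen for illustration, not a standard criterion, and the function names are hypothetical.

```python
import math


def skewness_of_mean(population_skewness, n):
    """Skewness of the mean of n i.i.d. draws: gamma / sqrt(n).

    Requires a finite third moment in the population.
    """
    return population_skewness / math.sqrt(n)


def n_for_target_skewness(population_skewness, target):
    """Smallest n (at least 1) whose sample mean has |skewness| <= target."""
    return max(1, math.ceil((abs(population_skewness) / target) ** 2))


def lognormal_skewness(sigma):
    """Skewness of a lognormal distribution whose logarithm has standard deviation sigma."""
    s2 = sigma ** 2
    return (math.exp(s2) + 2) * math.sqrt(math.exp(s2) - 1)


# Assumed example populations with known skewness values.
populations = {
    "uniform": 0.0,
    "exponential": 2.0,
    "lognormal (sigma=1)": lognormal_skewness(1.0),
}

TARGET = 0.25  # hypothetical "close enough to normal" threshold

for name, gamma in populations.items():
    print(f"{name:20s} skewness of mean at n=30: {skewness_of_mean(gamma, 30):.3f}  "
          f"n needed for |skewness| <= {TARGET}: {n_for_target_skewness(gamma, TARGET)}")
```

Under this assumed threshold, a symmetric population is fine at any n, an exponential population needs roughly n = 64, and a lognormal population with σ = 1 needs several hundred observations.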

The central limit theorem has many applications in statistical analysis, including estimating population parameters from sample statistics, constructing confidence intervals and margins of error, and hypothesis testing. It is widely used in quality control, election polling, and medical research.

The central limit theorem assumes that the samples are drawn from a population with finite variance and that the samples are independent of each other. If these assumptions do not hold, the Central Limit Theorem may not be applicable. Alternative approaches, such as non-parametric methods, may be used in such situations.
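The finite-variance limitation can be seen with the Cauchy distribution, which has neither a finite mean nor a finite variance. The mean of n independent standard Cauchy draws is itself standard Cauchy, so averaging more data does not narrow the spread of the sample mean. The sketch below compares it with the sample median, one simple non-parametric estimator of the center, which does concentrate as n grows. The sample sizes, repetition count, seed, and helper names are illustrative assumptions.

```python
import math
import random
import statistics


def cauchy(rng):
    """Standard Cauchy draw via the inverse CDF: tan(pi * (U - 1/2))."""
    return math.tan(math.pi * (rng.random() - 0.5))


def iqr(xs):
    """Interquartile range (Q3 - Q1), a spread measure that exists even for Cauchy data."""
    q1, _, q3 = statistics.quantiles(xs, n=4)
    return q3 - q1


def spread_of_estimators(n, reps=1000, seed=1):
    """IQR of the sample mean and of the sample median across `reps` Cauchy samples of size n."""
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(reps):
        sample = [cauchy(rng) for _ in range(n)]
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return iqr(means), iqr(medians)


for n in (10, 100, 400):
    mean_iqr, median_iqr = spread_of_estimators(n)
    # The IQR of a standard Cauchy variable is 2; the mean's IQR should stay near 2.
    print(f"n={n:4d}  IQR of sample means={mean_iqr:.2f}  IQR of sample medians={median_iqr:.3f}")
```

The interquartile range is used instead of the standard deviation because the standard deviation of Cauchy sample means does not settle down as more repetitions are added.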

Understanding the central limit theorem is crucial for making informed decisions based on data, particularly in fields that require statistical analysis. By understanding the central limit theorem, you can estimate population parameters from sample statistics and quantify how precise those estimates are. This knowledge can help you make better decisions and achieve your financial goals.

True Tamplin is a published author, public speaker, CEO of UpDigital, and founder of Finance Strategists.

True is a Certified Educator in Personal Finance (CEPF®), author of The Handy Financial Ratios Guide, a member of the Society for Advancing Business Editing and Writing, and a contributor to his financial education site, Finance Strategists. He has spoken to various financial communities, such as the CFA Institute, as well as to university students, including those at his alma mater, Biola University, where he received a bachelor of science in business and data analytics.

To learn more about True, visit his personal website or view his author profiles on Amazon, Nasdaq and Forbes.